nvc++ is the HPC C++ compiler and does not currently support compiling CUDA C programs.

CMAKE CUDA INSTALL

There’s no need to install the CUDA components separately, but you certainly can configure the compilers to use your own CUDA SDK installation.

Details about the CUDA installations and configuration can be found in the HPC Compilers User’s Guide: HPC Compilers User's Guide Version 22.7 for ARM, OpenPower, x86

As noted in the documentation, the HPC Compilers do check the CUDA Driver version on the system to set the default CUDA version to use, but this is easily overridden via command line options or by setting environment variables such as CUDA_HOME.

“10.1”, “10.2”, and “11.0” are the various CUDA installations packaged with the compilers for convenience. No need to reinstall them if you install a new version of the compilers. “2020” is a common directory for packages, such as OpenMPI or NetCDF, shared by all HPC Compilers released in 2020. Additional releases can be co-installed, so if you install the upcoming 20.7 release, it would be installed next to 20.5 but not overwrite it. “20.5” is the HPC Compiler (formerly PGI) installation for the 20.5 release.

So fixing where cmake finds the CUDA install’s root directory may allow these directories to be found as well.

The problem is that ANY location such as /usr/local/cuda cannot refer to both of these trees simultaneously.

Again, I’m not an expert in cmake, but my assumption would be that CUDA_INCLUDE_DIRS and CUDA_CUDART_LIBRARY refer to directories under the root CUDA installation. Since I’m not an expert on cmake myself, questions on using cmake are probably best addressed by Kitware (the makers of cmake).
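The assumption that the header and runtime library live under one CUDA root can be checked by hand. The following is a minimal sketch, not a description of how cmake itself searches: the helper name `has_cuda_layout`, the list of library subdirectories, and the default root are all assumptions made for illustration.

```shell
#!/bin/sh
# has_cuda_layout ROOT
# Hypothetical helper: succeeds if ROOT contains include/cuda_runtime.h and a
# libcudart library in one of a few commonly used library subdirectories.
# The subdirectory list is an assumption, not cmake's actual search logic.
has_cuda_layout() {
    root=$1
    if [ ! -f "$root/include/cuda_runtime.h" ]; then
        return 1
    fi
    for libdir in lib64 lib targets/x86_64-linux/lib; do
        for lib in "$root/$libdir"/libcudart*; do
            # An unmatched glob stays literal, so confirm the file exists.
            if [ -e "$lib" ]; then
                return 0
            fi
        done
    done
    return 1
}

# Example root (an assumption); pass the directory to inspect as argument 1.
candidate=${1:-/usr/local/cuda}
if has_cuda_layout "$candidate"; then
    echo "$candidate: header and runtime library found"
else
    echo "$candidate: header or runtime library missing"
fi
```

If a candidate root fails this check, it is likely that the directory is not the base of a single CUDA version, which would be consistent with the "missing" variables cmake reports.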

Looks like you may need to set “-DCUDAToolkit_ROOT=/path/to/cuda/installation” when using installations other than “/usr/local/cuda”. I did find the following page: FindCUDAToolkit - CMake 3.24.2 Documentation.
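As a rough sketch of what that configure step might look like, assuming a source tree in the current directory and a build directory named `build` (both are placeholders, as is the `CUDA_ROOT` variable name):

```shell
#!/bin/sh
# Placeholder path, for illustration only; point this at your own CUDA root
# (for example, one of the versions bundled with the HPC SDK).
CUDA_ROOT=${CUDA_ROOT:-/path/to/cuda/installation}

# -S and -B select the source and build directories (CMake 3.13 or newer).
# CUDAToolkit_ROOT is the hint variable read by the FindCUDAToolkit module.
CONFIGURE_CMD="cmake -S . -B build -DCUDAToolkit_ROOT=$CUDA_ROOT"

# Print the command instead of running it, so the sketch has no side effects.
echo "$CONFIGURE_CMD"
```

Projects that still call the older FindCUDA module (the one that reports CUDA_INCLUDE_DIRS and CUDA_CUDART_LIBRARY) read CUDA_TOOLKIT_ROOT_DIR rather than CUDAToolkit_ROOT, so the flag name may need to change to match the module the project actually uses.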

CMAKE CUDA FREE

Given users are free to install the CUDA SDK in any base location they like, my assumption is that the location of the CUDA installation cmake uses is configurable.

I also note that nvc++ is located ONLY here, with no other versions anywhere else:

In an earlier thread it sounded like I need to match one of these with the version number of the driver, but the SDK documentation is silent. Is one set of include files not necessary for building a package with cmake?

Also, the SDK has a combination of options:

The problem is that ANY location such as /usr/local/cuda cannot refer to both of these trees simultaneously. I tried them anyway but that did not fix anything. Setting variables like CUDA_HOME is intended for using ‘make’ rather than ‘cmake’, which is not normally supposed to need such settings.

I ‘installed’ the HPC_SDK package by running the ‘install’ command that came with it, and it put the various files along two trees starting with /opt/nvidia/hpc_sdk. I conclude from this that the HPC_SDK CUDA distribution is non-standard; otherwise, cmake would find it.

In which it DID succeed in finding OpenMP and MPI, and with CUDA specified as an included package, I get the following:

CMake Error at /usr/local/share/cmake-3.18/Modules/FindPackageHandleStandardArgs.cmake:165 (message):
Could NOT find CUDA (missing: CUDA_INCLUDE_DIRS CUDA_CUDART_LIBRARY) (found

However, when I run the initial cmake process, I did specify a path to nvcc, per the stated search behavior.

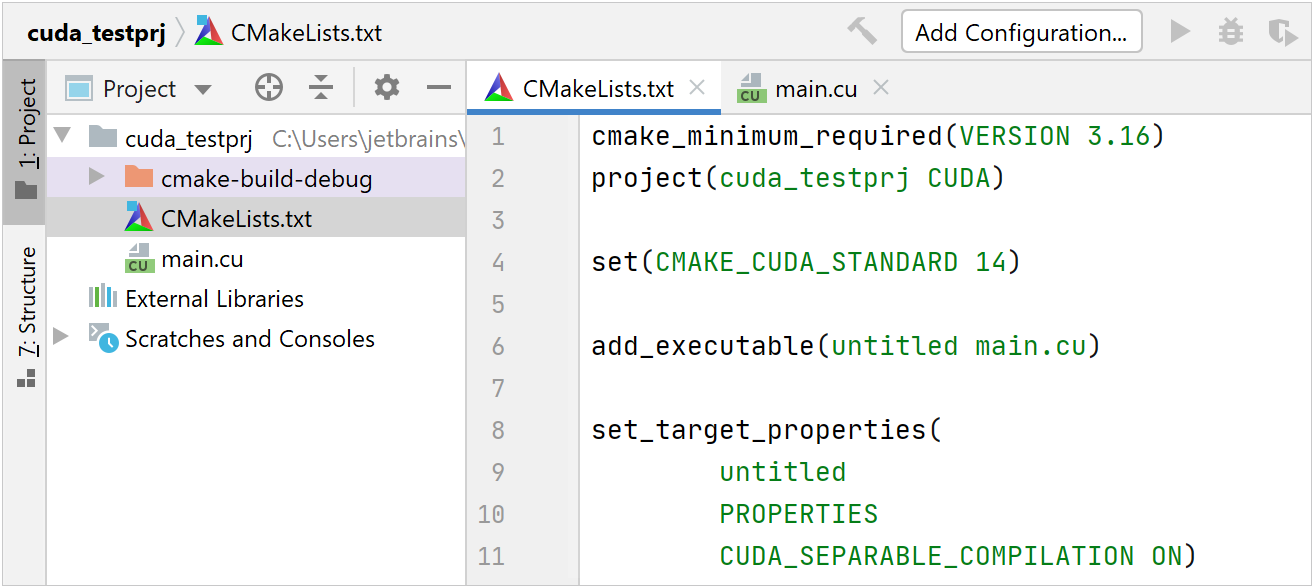

So there is NORMALLY no need to specify any paths. cmake has announced that CUDA is one of the packages that it can ‘find’ through a typical build process:

CMAKE CUDA HOW TO

I think ultimately what you’re asking is how to have the LAMMPS build use the CUDA install that ships with the HPC SDK? Instructions are found here:

Looks like you need to set CUDA_HOME to the directory of the CUDA version you wish to use, either one of those installed with the HPC SDK or one installed via the CUDA SDK.
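Setting CUDA_HOME before the build could look something like the sketch below. The default path is only an example, and prepending the `bin` directory to PATH is an extra step not mentioned in the thread, added here on the assumption that the build should pick up the matching nvcc.

```shell
#!/bin/sh
# Example location only (an assumption): use the root of the CUDA version you
# want, whether bundled with the HPC SDK or installed from the CUDA SDK.
CUDA_HOME=${CUDA_HOME:-/usr/local/cuda}
export CUDA_HOME

# Put that installation's nvcc ahead of any other copy on PATH.
PATH="$CUDA_HOME/bin:$PATH"
export PATH

echo "CUDA_HOME=$CUDA_HOME"
```

Run this in the same shell session as the build, since exported variables only reach processes started afterwards from that shell.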

CMAKE CUDA DOWNLOAD

You certainly can still download the CUDA SDK separately if you wish. This could be installed using the stand-alone CUDA SDK, which is often installed in “/usr/local/cuda” or “/opt/cuda” but really could be installed anywhere the user wishes.

As part of the HPC SDK, the CUDA components are bundled as well, but mostly for the convenience of users. The environment variable CUDA_HOME is the base location for your CUDA installation.

I guess I’m not quite understanding the basis for this question, but the first are the include files specific to the CUDA 10.2 release while the second is the location of the compiler include files. Put another way - there are header files here:
